Now that Adobe’s Generative Fill is Out of Beta, Here are Some Things You Should Know

Unless you’ve been living under a rock, you’ve undoubtedly heard of Adobe’s “generative fill” for Photoshop. The feature, which uses Adobe’s Firefly AI engine to add AI-generated imagery to your photos or artwork directly in Photoshop, has been in beta for a while and has been covered extensively in magazines, on websites and across various social platforms. It had been part of the Photoshop 25 beta, but Photoshop 25 has now officially been released, and Adobe Firefly in general is no longer in beta. Now that it is no longer test software, I wanted to share my experience and address a couple of big issues with generative fill.

Sensei vs. Firefly

If you’re wondering what Firefly is, it is the name for Adobe’s generative AI engine. It’s what Adobe has decided to call the set of services and the engine used to generate imagery across the variety of applications it supports. This seems to be a distinctly separate AI service from Adobe’s other artificial intelligence offering: Adobe Sensei. Sensei seems more focussed on image recognition, whereas Firefly is focussed on image generation.

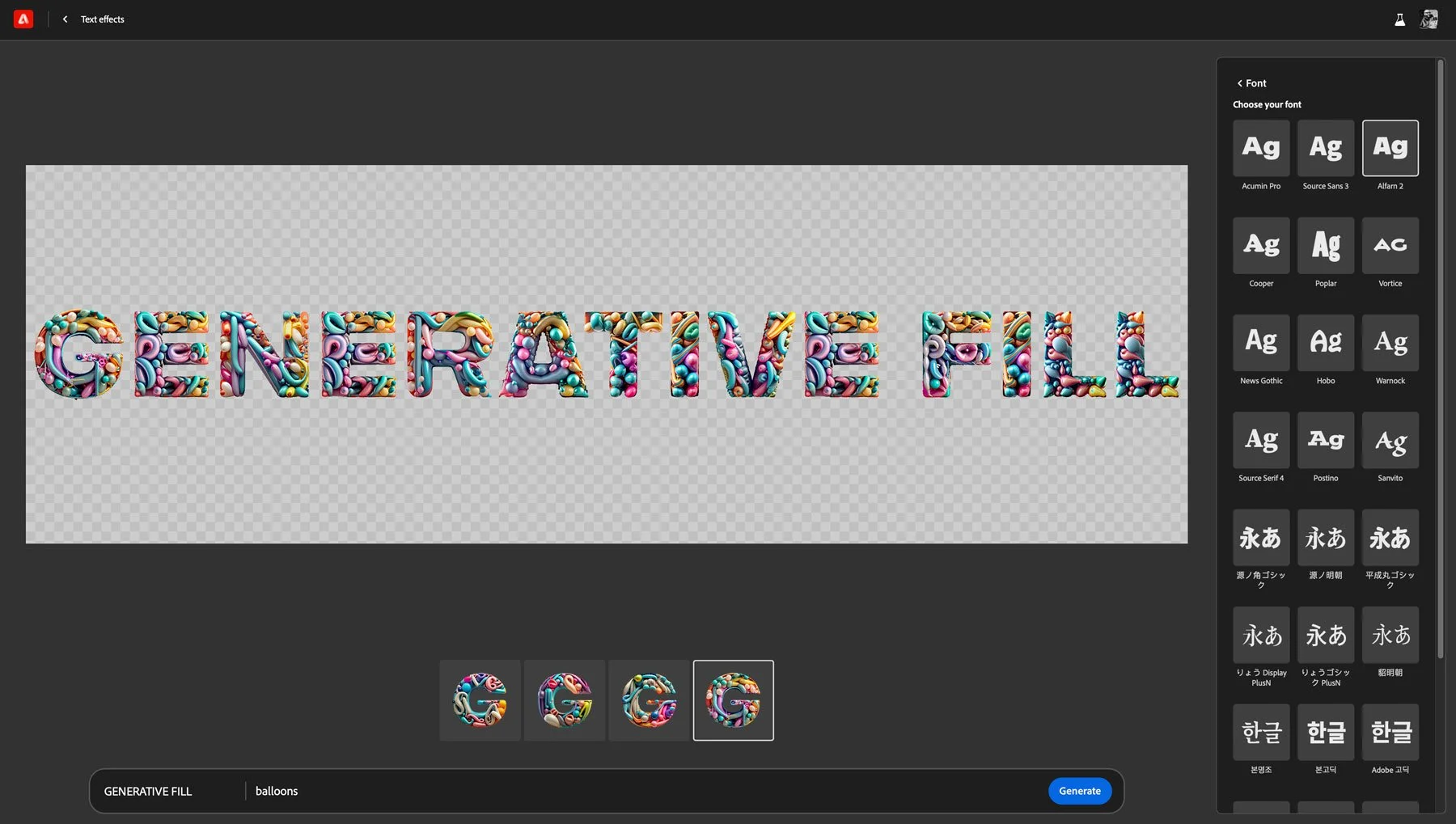

Adobe Firefly’s text generation function available on the web

The Good and the Bad

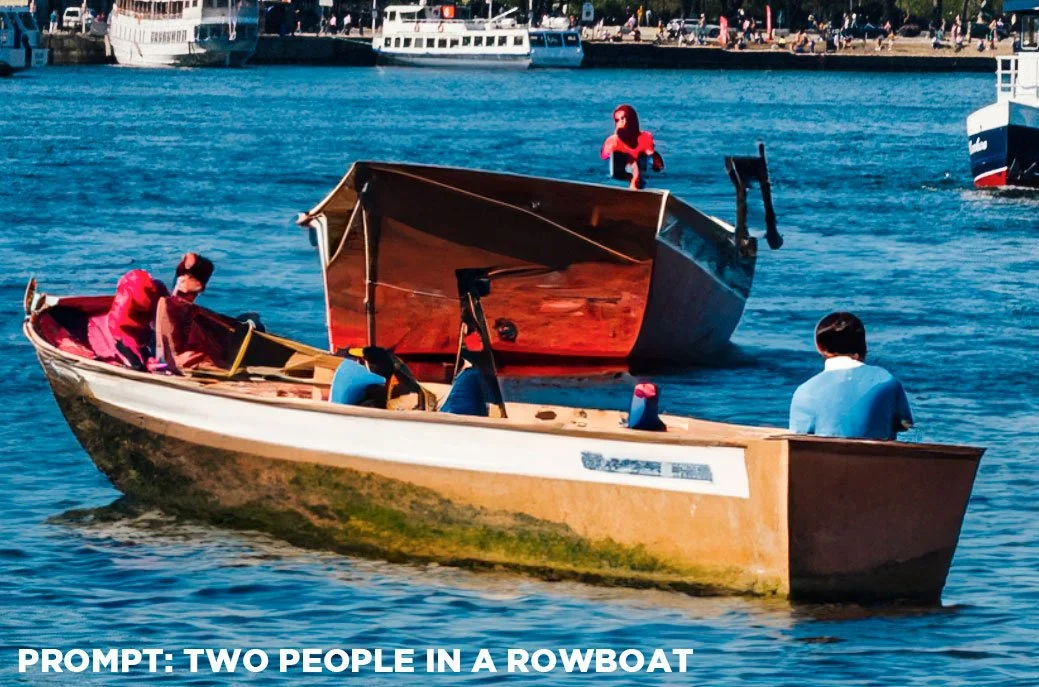

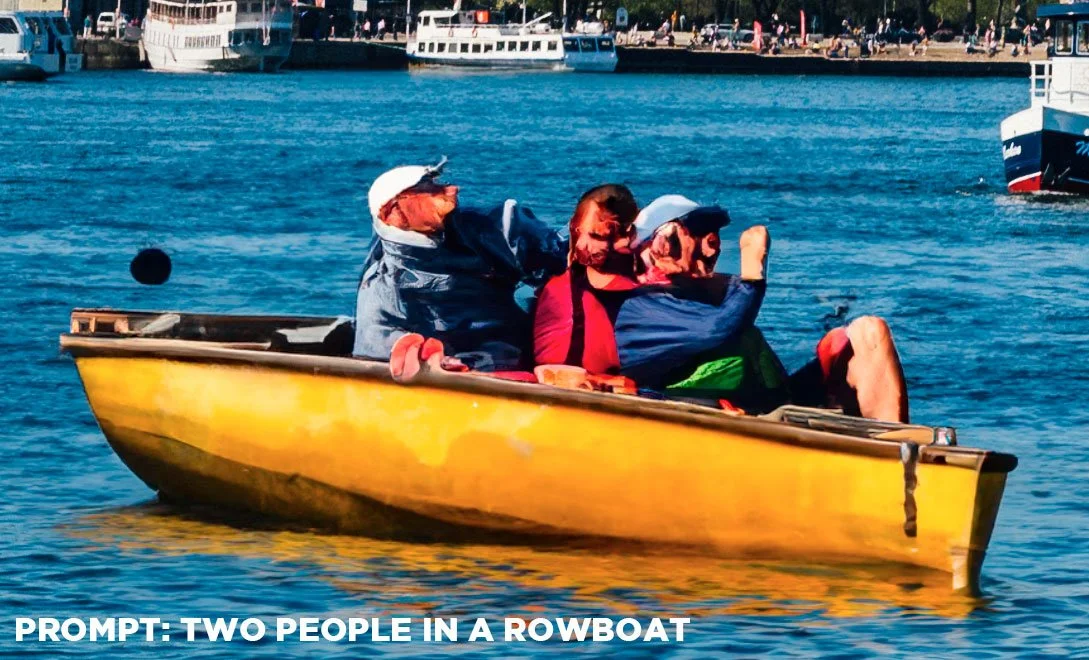

There’s no doubt that Adobe’s generative fill has generated (ha!) a lot of hype over the past few months, and it absolutely can produce amazing results. However, I also think that in some cases the hype has been overplayed, especially by people milking it for social media engagement. It is a powerful tool when it works well, but it has lots of limitations right now. In my personal experience, it has not remotely lived up to the hype when adding things to a scene. It has been better at removing things, however. In fact, when preparing screenshots to go with this article, I couldn’t get it to generate something to add to the scene that I could put in the good pile. It did make me laugh, though.

Generative fill can be really impressive, at times almost magical. Like other generative AI engines, it uses machine learning to take a text prompt and turn it into imagery. The results can vary from the freakishly accurate to the just freakish. You can use it to add all sorts of components to your scene, from animals to buildings, or to change the scenery, and so on. Occasionally, this works really well, and other times it gives you the stuff of nightmares.

When I prompted it to add “two people in a rowboat” to the scene

Second attempt. This time it looks like one of the people is a dog, and the other is two people merged together in some weird way

Probably the more useful aspect of this tool is its ability to both erase objects and expand images. You can use generative fill like a version of Content-Aware Fill on steroids, and it can produce almost perfect results, with one exception which I will get to shortly. Adobe also added generative expand to the crop tool, meaning you can expand the borders of your image and generative fill will try to fill in the blanks. This sometimes works very well, and other times, it just doesn’t.

And there is the first problem with generative fill. Like most other AI tools, it either works or it doesn’t. While there are people online who will convince you it’s just a matter of getting the prompt right, there are times when no amount of coaxing will get it to do what you want. It’s like rolling dice or playing darts. You can keep regenerating in the hope that something will come up that you want, but in some cases, it just won’t deliver for you.

The second issue is that most of the time the resolution of the generated imagery won’t match the actual image. It is often quite low resolution, upscaled to match your photo. This limits much of its use, especially for generative expand, as the expanded image will be at a much lower resolution than the rest of the photo. Currently, based on my experience, generative expand is really only suitable for screen resolution images. You may have better results with generative fill, but that can be quite soft at times too. One piece of good news, though, is that Adobe has said that it will add higher resolution imagery in the future.

Original image before expansion

Image expanded with generative expand

The resolution difference between the original and the generated image

For now, one workaround may be to work in smaller areas. Because the resolution of the generated area is limited, I’ve seen several tips suggesting that filling a larger area in sections can give you better results. I’ve tried this myself and the results were mixed, but it sort of works.
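To make the idea of working in sections a bit more concrete, here is a rough Python sketch of the arithmetic involved. It assumes that generated content is capped at roughly 1024 pixels on its longest side before being upscaled to fit your selection. That figure is an assumption I'm using for illustration, not a number taken from Adobe, and the function names are made up for this example.

```python
import math

# Assumed cap on the native size of a generated patch, in pixels.
# This figure is for illustration only, not a published Adobe spec.
ASSUMED_MAX_GENERATED_PX = 1024


def upscale_factor(width_px, height_px, cap_px=ASSUMED_MAX_GENERATED_PX):
    """Estimate how much a generated patch is stretched to cover a selection."""
    # A selection smaller than the cap needs no upscaling, so never go below 1.
    return max(1.0, max(width_px, height_px) / cap_px)


def sections_needed(width_px, height_px, cap_px=ASSUMED_MAX_GENERATED_PX):
    """Return (columns, rows) of sections so that no section exceeds the cap."""
    cols = math.ceil(width_px / cap_px)
    rows = math.ceil(height_px / cap_px)
    return cols, rows


if __name__ == "__main__":
    # Example: filling a 3000 x 4000 pixel area in one go versus in sections.
    print("Stretch if filled in one go:", upscale_factor(3000, 4000))
    print("Sections (columns, rows):", sections_needed(3000, 4000))
```

In practice you would probably want the sections to overlap a little so the seams blend, which this sketch ignores. It only shows why a large area filled in one go ends up so much softer than the same area filled piece by piece.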

There will be a price to pay – sort of

While this may sound like a commentary on the long-term effect AI is having on society, in this case I mean it quite literally. Adobe is going to charge for using its AI tools – well, sort of. I’m sure some people will be quite angry about this, but it is understandable on Adobe’s part, as these functions use a considerable amount of processing power on Adobe’s servers. Any use of Firefly, across any of the available applications, will be based on a credit system. You will get a number of credits with your subscription, but outside of that, additional credits will need to be purchased. For example, if you have the All Apps subscription, you will get 1000 generative credits per month.

Depending on your plan, the AI functions like generative fill will still work (apparently) even if you run out of credits, but they will run at a low priority, and so will basically be speed throttled. How much of a difference this will make is yet to be seen, as this hasn’t come into effect yet, but this does kind of take the shine off the new features. If you are on one of the free plans (using Adobe Express, for example), once you use up your credits you will only be able to use generative tools twice per day. It’s also important to note that unused credits will not roll over from month to month.
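If it helps, here is how I picture the paid-plan behaviour described above, written as a tiny Python model. This is only a sketch of my own understanding and not Adobe's actual system: the class and method names are made up, and the cost of one credit per generation is an assumption.

```python
from dataclasses import dataclass


@dataclass
class CreditBalance:
    """Toy model of a monthly generative credit allowance on a paid plan."""

    monthly_allowance: int  # e.g. 1000 on the All Apps plan
    remaining: int = 0

    def start_new_month(self):
        # Unused credits do not roll over, so the balance is reset
        # to the allowance rather than added to what was left.
        self.remaining = self.monthly_allowance

    def generate(self, cost=1):
        """Spend credits for one generation and return the priority it runs at.

        The cost of 1 credit per generation is an assumption for illustration.
        """
        if self.remaining >= cost:
            self.remaining -= cost
            return "normal"
        # Out of credits: the feature still works, but at low priority.
        return "throttled"


if __name__ == "__main__":
    balance = CreditBalance(monthly_allowance=1000)
    balance.start_new_month()
    print(balance.generate(), balance.remaining)
```

The free plans behave differently once the credits are gone (the twice-per-day limit), which this model leaves out.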

If this all sounds incredibly confusing, you are completely correct. In fact, I’d like to add the disclaimer that I may well have misunderstood or gotten some of these details wrong. You can find out more details of how the credit system will work on Adobe’s website.

Conclusion

It’s kind of hard to believe that the AI image generation revolution has only been around for such a short time, considering the media coverage. Midjourney, for example, probably the best of the current generative AI tools, has only been around since 2022. The first version of DALL-E was launched in 2021, and it didn’t really gain traction until DALL-E 2 was released in 2022. So it’s important to remember that this technology is incredibly new. In a few years, who knows where this might take us? Adobe’s generative fill is capable of some incredible results, but also some terrible ones. It’s important to temper expectations accordingly, and also to remember that these are only the very earliest days of generative AI technology.

Note that this post contains paid affiliate links. We get a small commission for purchases made through these links, which helps run this site.